Cutting to the chase: automated AI brand tracking, continuous AI monitoring, and real-time AI visibility alerts are no longer optional. They are operational controls. This article gives you a comparison framework for choosing between options, with a data-driven lens and pragmatic recommendations. Expect analogies, a decision matrix, and clear criteria you can apply to your organization.

Foundational understanding: what you actually need

Before choosing a path, be precise about what "AI visibility monitoring" means for you. At minimum, you want three capabilities:

- Automated AI brand tracking: continuous discovery of where your brand or models are being used, quoted, or misrepresented.
- Continuous AI monitoring: ongoing telemetry of model outputs, downstream behavior, and third-party usage signals.
- Real-time AI visibility alerts: notifications when thresholds are crossed, anomalies appear, or policy violations occur.

Think of these capabilities like a home security system. Brand tracking is the neighborhood watch—who's talking about you. Continuous monitoring is the security cameras—constant recording and analytics. Real-time alerts are the alarm system—instant notification when a sensor trips. You can install all three yourself, hire a company to do it, or do a mix of both.
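To make the "anomalies" part of real-time alerts concrete, here is a minimal sketch that assumes you already aggregate brand mentions into hourly counts. It flags any hour whose count sits more than `threshold` standard deviations above the preceding `window` hours (a rolling z-score). The function name, the 24-hour window, and the 3.0 threshold are illustrative assumptions, not tuned values.

```python
from statistics import mean, stdev


def zscore_alerts(counts, window=24, threshold=3.0):
    """Return indices of intervals whose count exceeds the rolling mean of
    the previous `window` intervals by more than `threshold` std devs."""
    alerts = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any increase as anomalous
            if counts[i] > mu:
                alerts.append(i)
        elif (counts[i] - mu) / sigma > threshold:
            alerts.append(i)
    return alerts


# Hypothetical hourly mention counts: a steady day followed by a spike
hourly_mentions = [10, 12, 11, 9, 10, 13, 11, 10] * 3 + [60]
print(zscore_alerts(hourly_mentions))  # [24]
```

A rolling z-score is only one option; traffic with strong daily or weekly seasonality usually needs a baseline that accounts for time of day or week.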

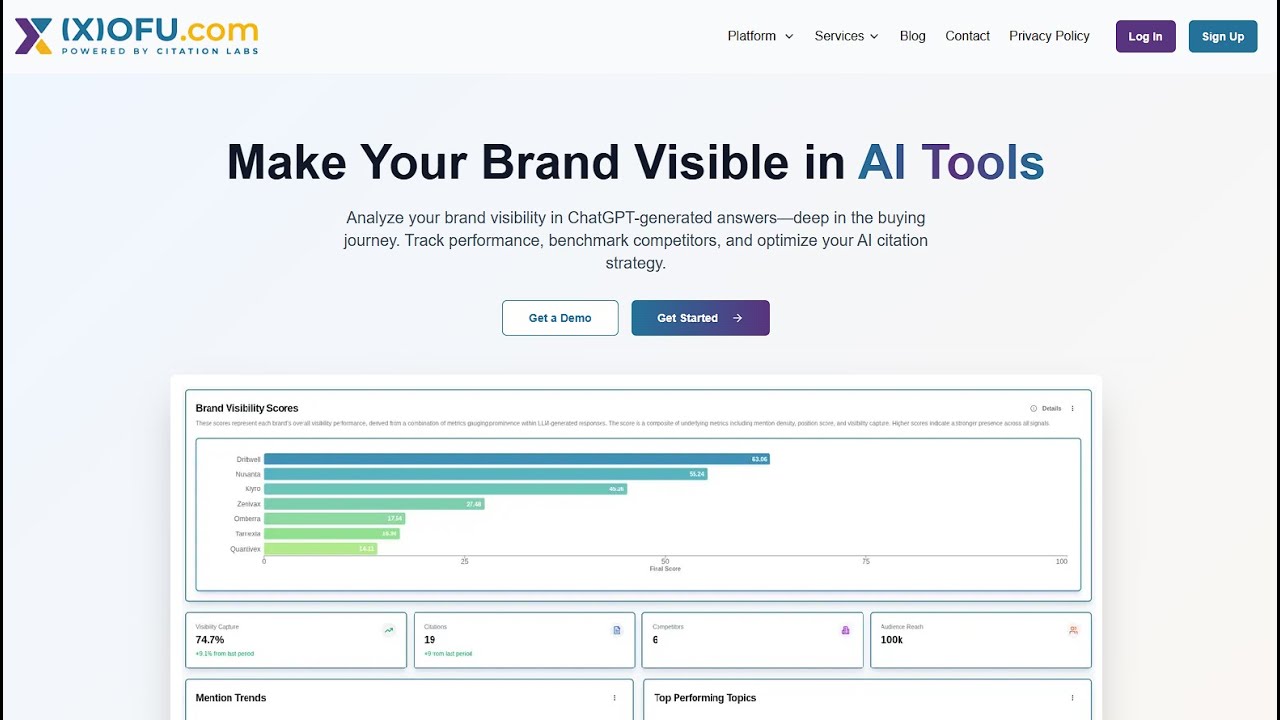

[Screenshot placeholder: Example dashboard showing real-time AI visibility alerts and recent brand mentions]

1. Establish comparison criteria

Use measurable criteria to compare options. Below are pragmatic criteria aligned to business outcomes and operational constraints:

- Detection accuracy: precision and recall for brand mentions, model misuse, or anomalies.
- Latency: how quickly the system detects and alerts (seconds, minutes, hours).
- Coverage: channels monitored (web, social, dark web, APIs, partners), languages, and geographies.
- Customization: ability to tune detectors, label policies, and integrate proprietary signals.
- Cost: total cost of ownership (TCO), including engineering hours, SaaS fees, data costs, and incident handling.
- Time to value: how long until you have reliable, actionable alerts.
- Security & compliance: data residency, encryption, audit trails, and privacy controls.
- Operational burden: staffing and processes required to maintain and act on alerts.

These criteria feed into a decision matrix and should be weighted by your priorities. For example, highly regulated firms often give the most weight to security & compliance and detection accuracy, while fast-moving consumer brands may prioritize latency and coverage.
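One way to apply the weighting is a simple weighted-sum score per option. The sketch below uses hypothetical 1-5 scores (higher is always better, so for cost and operational burden a higher score means cheaper or lighter) and weights that lean toward a compliance-heavy organization. Every number is an assumption to replace with your own assessment.

```python
# Hypothetical weights for a compliance-heavy organization (sum to 1.0)
WEIGHTS = {
    "detection_accuracy": 0.25,
    "latency": 0.10,
    "coverage": 0.10,
    "customization": 0.10,
    "cost": 0.05,
    "time_to_value": 0.05,
    "security_compliance": 0.25,
    "operational_burden": 0.10,
}

# Hypothetical 1-5 scores per option; higher is always better
OPTIONS = {
    "build": {"detection_accuracy": 4, "latency": 3, "coverage": 2, "customization": 5,
              "cost": 2, "time_to_value": 1, "security_compliance": 5, "operational_burden": 1},
    "buy": {"detection_accuracy": 4, "latency": 5, "coverage": 5, "customization": 3,
            "cost": 3, "time_to_value": 5, "security_compliance": 3, "operational_burden": 5},
    "hybrid": {"detection_accuracy": 5, "latency": 4, "coverage": 5, "customization": 4,
               "cost": 2, "time_to_value": 3, "security_compliance": 4, "operational_burden": 3},
}


def weighted_score(scores, weights):
    """Weighted sum of criterion scores."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


def rank_options(options, weights):
    """Return (option, score) pairs from best to worst."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    ranked = [(name, round(weighted_score(s, weights), 2)) for name, s in options.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


for name, score in rank_options(OPTIONS, WEIGHTS):
    print(f"{name}: {score}")
```

With these made-up inputs, hybrid ranks first, buy second, and build third. Shifting weight toward latency, coverage, and time to value can put buy first, which mirrors the consumer-brand case above.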

2. Option A — Build in-house (pros and cons)

What it looks like

Build a custom stack: crawlers/scrapers, API integrations, embedding models for entity recognition, a streaming pipeline, alerting rules, and a dashboard. You'll own ingestion, models, detection logic, storage, and UIs.
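The detection stage of such a stack can start far simpler than an embedding model. This sketch deliberately swaps the entity-recognition step for plain alias matching: it scans (document ID, text) pairs for a hypothetical list of brand aliases using word-boundary regular expressions and keeps a snippet of context for triage. Embedding-based matching would catch paraphrases that this approach misses.

```python
import re
from dataclasses import dataclass


@dataclass
class Mention:
    doc_id: str
    matched_text: str  # the text as it appeared, not the canonical alias
    snippet: str


def build_matcher(aliases):
    """Compile one case-insensitive, word-bounded pattern for all aliases."""
    # Longest first, so "Acme Cloud" is preferred over "Acme" at the same position
    ordered = sorted(aliases, key=len, reverse=True)
    pattern = r"\b(" + "|".join(re.escape(a) for a in ordered) + r")\b"
    return re.compile(pattern, re.IGNORECASE)


def find_mentions(docs, matcher, context=30):
    """Return one Mention per alias hit across (doc_id, text) pairs."""
    mentions = []
    for doc_id, text in docs:
        for m in matcher.finditer(text):
            start, end = max(0, m.start() - context), min(len(text), m.end() + context)
            mentions.append(Mention(doc_id, m.group(1), text[start:end]))
    return mentions


# Hypothetical brand aliases and crawled documents
matcher = build_matcher(["Acme", "Acme Cloud", "AcmeGPT"])
docs = [
    ("post-1", "Tried AcmeGPT today and it invented a refund policy."),
    ("post-2", "Nothing relevant here."),
    ("post-3", "acme cloud outage again?"),
]
for mention in find_mentions(docs, matcher):
    print(mention.doc_id, mention.matched_text)
```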

Pros

- Customization: Full control over detection logic and thresholds tailored to your brand and risk posture.
- Data ownership: Sensitive signals remain in your environment, which makes compliance controls easier to meet.
- Integrations: Deep integration with internal ticketing, SIEM, and governance tooling.
- Potential cost efficiency at scale: Over time and at scale, the unit cost per alert can be lower if you already have platform investments.

Cons

- Time to value: Initial deployment can take months; reliable detection may take quarters.
- Engineering burden: Requires dedicated data engineering, ML, security, and SRE resources.
- Maintenance: Rule drift, concept drift, and platform upgrades become your responsibility.
- Coverage gaps: Building integrations with third-party platforms (closed APIs, social networks) can be slow or impossible.

In contrast to off-the-shelf solutions, the in-house option trades velocity for control. If your brand or models expose you to high regulatory risk and you have engineering capacity, building can make sense. Similarly, if you have unique signals (proprietary telemetry, partner logs), building lets you surface them directly.

3. Option B — Buy a SaaS/managed solution (pros and cons)

What it looks like

Subscribe to a vendor that offers AI visibility monitoring. The vendor provides connectors to social, web, and API sources, embedded detection models, dashboards, and alerting. Managed options add on-call analysts and incident triage.

Pros

- Speed: Deploy in days or weeks with immediate coverage across many channels.
- Lower operational load: The vendor handles model updates, scraping rules, and maintenance.
- Broad coverage: Vendors often have prebuilt integrations with platforms you cannot easily access in-house.
- Benchmarking: You get comparative signals across industries (helpful for validating anomalies).

Cons

- Less customization: Out-of-the-box rules may not fit niche brand nuances.
- Data residency and compliance: Sending sensitive telemetry to a third party can create regulatory friction.
- Vendor lock-in and cost scaling: Volume-based pricing for detections and alerts can become expensive as you scale.
- Limited transparency: You have little ability to audit the model internals or training data that generate alerts.

On the other hand, vendors accelerate time to value and reduce internal headcount requirements. For teams that need immediate, broad visibility and lack mature platform teams, SaaS is frequently the pragmatic choice.
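The cost trade-off between volume-based SaaS pricing and an engineering-heavy in-house build can be framed as a break-even calculation. This sketch assumes a deliberately simple linear model: each option has a fixed monthly cost plus a per-detection cost. All prices are hypothetical placeholders, and real contracts often have pricing tiers that this model ignores.

```python
def monthly_cost(fixed, per_detection, volume):
    """Linear cost model: fixed monthly cost plus a per-detection cost."""
    return fixed + per_detection * volume


def break_even_volume(saas_fixed, saas_unit, inhouse_fixed, inhouse_unit):
    """Monthly detection volume above which in-house is cheaper than SaaS.

    Returns None when SaaS is not more expensive per detection, because the
    "in-house wins above volume X" framing does not apply in that case."""
    if saas_unit <= inhouse_unit:
        return None
    return max(0.0, (inhouse_fixed - saas_fixed) / (saas_unit - inhouse_unit))


# Hypothetical figures: $2,000/month platform fee + $0.50 per detection for SaaS,
# versus $30,000/month of engineering time + $0.05 per detection in-house
volume = break_even_volume(2_000, 0.50, 30_000, 0.05)
print(f"break-even at about {volume:,.0f} detections per month")
```

Below the break-even volume, the subscription is cheaper. Above it, the lower in-house unit cost starts to pay back the fixed engineering spend.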

4. Option C — Hybrid (pros and cons)

What it looks like

A hybrid approach combines vendor coverage for broad surface area with in-house detection for sensitive or high-value signals. Typically, you forward vendor alerts into your internal triage pipeline and retain local processing for regulated telemetry.
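Here is a minimal sketch of that routing rule. It assumes each event carries a source label and a flag saying whether it contains regulated data. The `Event` type, the queue names, and the choice to share non-regulated internal signals with the vendor are all hypothetical; adapt them to your own data classification policy.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    source: str      # "vendor" or "internal" (hypothetical labels)
    regulated: bool  # True if the payload contains regulated data
    payload: dict = field(default_factory=dict)


def route(event: Event) -> str:
    """Return the destination queue for an event in a hybrid setup."""
    if event.regulated:
        # Regulated telemetry stays in your environment for in-house detection
        return "local_detection"
    if event.source == "vendor":
        # Vendor alerts flow into the same internal triage pipeline as your own
        return "internal_triage"
    # Assumed policy: non-regulated internal signals may be shared for vendor correlation
    return "vendor_export"


print(route(Event("vendor", regulated=False)))   # internal_triage
print(route(Event("internal", regulated=True)))  # local_detection
```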

Pros

- Best of both: Broad vendor coverage with in-house control of sensitive processes.
- Incremental investment: Rapid coverage now, bespoke detectors later as use cases mature.
- Risk mitigation: Reduces vendor data exposure while still leveraging external scale.
- Faster learning: Vendor data helps bootstrap in-house models and rules.

Cons

- Operational complexity: Two systems to reconcile, duplicate alerts, and integration overhead.
- Costs in both places: You pay for vendor services and internal engineering time.
- Coordination: Requires clear ownership and playbooks to avoid gaps or alert fatigue.

Much as enterprises secure physical facilities with outsourced guard services plus internal access control, the hybrid approach balances scale and control. Unlike either pure option, it reduces single points of failure while increasing orchestration needs.
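The duplicate-alert problem listed among the hybrid cons can be reduced with a fingerprint-and-window rule. This sketch assumes each alert is a dict with the hypothetical keys `source`, `entity`, `kind`, and `ts`. It treats two alerts as duplicates when they share a normalized entity and kind and arrive within 30 minutes of the alert already kept. The fingerprint fields and the window are assumptions to tune.

```python
from datetime import datetime, timedelta


def dedupe_alerts(alerts, window=timedelta(minutes=30)):
    """Keep the first alert per (entity, kind) fingerprint within `window`."""
    kept = []
    kept_at = {}  # fingerprint -> timestamp of the alert we kept for it
    for alert in sorted(alerts, key=lambda a: a["ts"]):
        fingerprint = (alert["entity"].strip().lower(), alert["kind"])
        previous = kept_at.get(fingerprint)
        if previous is not None and alert["ts"] - previous <= window:
            continue  # same issue reported by the other system (or re-fired)
        kept_at[fingerprint] = alert["ts"]
        kept.append(alert)
    return kept


t0 = datetime(2024, 1, 1, 12, 0)
alerts = [
    {"source": "vendor", "entity": "AcmeGPT", "kind": "misquote", "ts": t0},
    {"source": "inhouse", "entity": "acmegpt ", "kind": "misquote", "ts": t0 + timedelta(minutes=5)},
    {"source": "vendor", "entity": "AcmeGPT", "kind": "impersonation", "ts": t0 + timedelta(minutes=1)},
    {"source": "inhouse", "entity": "AcmeGPT", "kind": "misquote", "ts": t0 + timedelta(hours=2)},
]
print(len(dedupe_alerts(alerts)))  # 3
```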

5. Decision matrix

| Criteria | Option A: Build (in-house) | Option B: Buy (SaaS) | Option C: Hybrid |
|---|---|---|---|
| Detection accuracy | High (if tuned); requires training data | Good; vendor models tuned across customers | High overall; vendor for breadth, in-house for critical cases |
| Latency | Variable; depends on infra | Low (near real-time) | Low for vendor signals; variable for in-house |
| Coverage | Limited initially; expands with effort | Broad across channels & languages | Broad + targeted deep coverage |
| Customization | Very high | Moderate | High |
| Time to value | Slow (months) | Fast (days to weeks) | Fast to start, slow to fully optimize |
| Cost | High upfront; lower at scale (engineering-heavy) | Ongoing subscription; scales with volume | Mixed: subscription + engineering |
| Security & compliance | Easiest to control | Depends on vendor certifications | Balanced: keep sensitive data internal |
| Operational burden | High | Low | Moderate |

6. Clear recommendations (decision rules)

Below are decision rules based on company size, risk profile, and available resources. Use them as prescriptive guidelines rather than dogma.

If you are a startup or growing digital brand (low compliance burden)

- Recommendation: Start with SaaS (Option B).
- Why: Fast coverage, low ops cost, and quick alerts let you iterate on DM/PR playbooks and product gating.
- When to move: If alert volume or data sensitivity grows, transition to hybrid to keep critical signals in-house.

If you are a regulated enterprise (finance, healthcare, government)

- Recommendation: Hybrid (Option C), with prioritized in-house detection for regulated data and a vendor for broad monitoring.
- Why: Compliance constraints often require data residency and auditability while still needing broad surface coverage.
- Implementation tip: Use vendor outputs as a signal layer, but route sensitive telemetry to your SIEM and keep forensic artifacts on-prem.

If you have a large platform or custom models and a mature ML/infra team

- Recommendation: Build in-house (Option A), or go hybrid during the transition.
- Why: Unique telemetry and proprietary models need bespoke detectors and full ownership of mitigation flows.
- Implementation tip: Invest in labeling pipelines, continuous evaluation (precision/recall dashboards), and drift detection.
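The three rules can be encoded as a small function, for example to document the reasoning behind a choice. The boolean inputs are a simplification. The precedence is also an assumption: the function checks first for a mature ML team with custom models or telemetry, because the rules overlap for organizations that are both regulated and platform-mature.

```python
def recommend(regulated: bool, mature_ml_team: bool, custom_models_or_telemetry: bool) -> str:
    """Map simplified yes/no answers to Option A ("build"), B ("buy"), or C ("hybrid")."""
    if mature_ml_team and custom_models_or_telemetry:
        return "build"   # or hybrid while transitioning
    if regulated:
        return "hybrid"  # in-house for regulated data, vendor for breadth
    return "buy"         # default for startups and growing brands; revisit as volume grows


print(recommend(regulated=False, mature_ml_team=False, custom_models_or_telemetry=False))  # buy
print(recommend(regulated=True, mature_ml_team=False, custom_models_or_telemetry=True))    # hybrid
```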

Operational playbook—practical steps to deploy any option

Regardless of the option you choose, follow a short, repeatable playbook to get to reliable alerts quickly:

1. Define priority signals (brand phrases, model IDs, negative behaviors) and label definitions.
2. Choose a minimal channel set for the pilot (e.g., public web + Twitter + product telemetry).
3. Implement an initial detection model or vendor connector, and set conservative thresholds to avoid alert fatigue.
4. Establish an SLA and triage workflow: who investigates alerts, how to escalate, and how to remediate.
5. Measure key metrics: precision, recall, mean time to detect (MTTD), mean time to remediate (MTTR), and false positive rate.
6. Iterate based on metrics: reduce false positives, expand coverage, and automate playbooks for common incidents.

Analogously, treat early deployment like a fire drill: start with low-risk incidents and tighten the system as you validate that alerts are accurate and actionable.
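For the SLA and triage step of the playbook, here is a minimal sketch. Each severity gets a maximum time to first acknowledgment, and any unacknowledged alert past that limit is flagged for escalation. The severity names and SLA values are hypothetical examples, not recommendations.

```python
from datetime import datetime, timedelta

# Hypothetical SLA: maximum time from alert creation to first acknowledgment
ACK_SLA = {
    "critical": timedelta(minutes=15),
    "high": timedelta(hours=1),
    "low": timedelta(hours=24),
}


def needs_escalation(alert, now):
    """True if an unacknowledged alert has exceeded its severity's SLA."""
    if alert.get("acked_at") is not None:
        return False
    return now - alert["created_at"] > ACK_SLA[alert["severity"]]


def overdue(alerts, now):
    """Return the alerts that should be escalated to the next on-call tier."""
    return [a for a in alerts if needs_escalation(a, now)]


now = datetime(2024, 1, 1, 12, 30)
queue = [
    {"id": 1, "severity": "critical", "created_at": datetime(2024, 1, 1, 12, 0), "acked_at": None},
    {"id": 2, "severity": "high", "created_at": datetime(2024, 1, 1, 12, 0), "acked_at": None},
    {"id": 3, "severity": "critical", "created_at": datetime(2024, 1, 1, 12, 0),
     "acked_at": datetime(2024, 1, 1, 12, 5)},
]
print([a["id"] for a in overdue(queue, now)])  # [1]
```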

Metrics that prove value (what to measure)

Proof-focused monitoring means quantifying both detection quality and business impact. Track a small set of metrics daily/weekly:

- Detection precision and recall: precision is the share of alerts that were true positives; recall is the share of real incidents that were detected rather than missed.
- MTTD and MTTR: measure the value of real-time alerting.
- False positive rate per alert source: helps tune thresholds and avoid fatigue.
- Cost per incident investigated: ties monitoring spend to operational cost.
- Coverage percentage: the percentage of prioritized channels and geographies monitored.
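Here is a minimal sketch of computing these metrics from triage records. It assumes two things. First, each alert is labeled as a true or false positive after investigation. Second, each known incident records when it occurred, when (if ever) it was detected, and when it was remediated. The record shapes are hypothetical.

MTTR is measured here from detection to remediation, which is one of several common conventions. True negatives are rarely countable in monitoring, so the per-source figure is the share of that source's alerts that were false positives (one minus its precision).

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import mean


def precision(alerts):
    """Share of alerts that triage confirmed as real incidents."""
    return sum(a["true_positive"] for a in alerts) / len(alerts)


def recall(incidents):
    """Share of known incidents that monitoring detected at all."""
    return sum(i["detected"] is not None for i in incidents) / len(incidents)


def false_positive_share_by_source(alerts):
    """Per source, the fraction of its alerts that were false positives."""
    totals, false_pos = defaultdict(int), defaultdict(int)
    for a in alerts:
        totals[a["source"]] += 1
        false_pos[a["source"]] += not a["true_positive"]
    return {source: false_pos[source] / totals[source] for source in totals}


def mttd(incidents):
    """Mean time to detect, over incidents that were detected."""
    detected = [i for i in incidents if i["detected"] is not None]
    return timedelta(seconds=mean((i["detected"] - i["occurred"]).total_seconds() for i in detected))


def mttr(incidents):
    """Mean time from detection to remediation, over remediated incidents."""
    fixed = [i for i in incidents if i["detected"] is not None and i["remediated"] is not None]
    return timedelta(seconds=mean((i["remediated"] - i["detected"]).total_seconds() for i in fixed))


t = datetime(2024, 1, 1)
alerts = [
    {"source": "vendor", "true_positive": True},
    {"source": "vendor", "true_positive": False},
    {"source": "inhouse", "true_positive": True},
    {"source": "inhouse", "true_positive": True},
]
incidents = [
    {"occurred": t, "detected": t + timedelta(minutes=10), "remediated": t + timedelta(minutes=70)},
    {"occurred": t, "detected": t + timedelta(minutes=30), "remediated": t + timedelta(minutes=90)},
    {"occurred": t, "detected": None, "remediated": None},  # a missed incident
]
print(precision(alerts), recall(incidents))
print(false_positive_share_by_source(alerts))
print(mttd(incidents), mttr(incidents))  # 0:20:00 1:00:00
```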

For example, if you deploy a SaaS monitor and see MTTD drop from 6 hours to 10 minutes and MTTR from 4 hours to 1 hour, that's quantifiable value. In contrast, if precision is only 20% (four of every five alerts are false positives), the operational cost of triage may outweigh the latency gains.
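Here is the arithmetic behind that example as a small sketch. It treats an incident's exposure window as MTTD plus MTTR. It also assumes, hypothetically, 15 minutes of analyst time per alert: at a precision of p, you triage 1/p alerts for every real incident.

```python
def exposure_hours(mttd_hours, mttr_hours):
    """Time an incident stays live: time to detect plus time to remediate."""
    return mttd_hours + mttr_hours


def triage_minutes_per_true_incident(precision, minutes_per_alert):
    """At precision p you investigate 1/p alerts for each real incident."""
    return minutes_per_alert / precision


before = exposure_hours(6.0, 4.0)     # 10 hours
after = exposure_hours(10 / 60, 1.0)  # about 1.17 hours
print(f"exposure reduced by {1 - after / before:.0%}")  # exposure reduced by 88%

# Hypothetical 15 minutes of analyst time per alert
print(triage_minutes_per_true_incident(0.20, 15))  # 75.0
print(triage_minutes_per_true_incident(0.80, 15))  # 18.75
```

At 20% precision, each real incident costs four times as much triage time as it would at 80%. That is the overhead that can cancel out a faster MTTD.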

Common pitfalls and how to avoid them

- Alert fatigue: Tune thresholds and introduce multi-signal correlation (e.g., a mention plus a suspicious API call) before escalating.
- Overfitting detectors: Avoid rules that only catch past incidents; validate on future or held-out data.
- Vendor blind spots: If you buy, ask for evidence of channel coverage and sample detections for your brand before signing.
- Underestimating maintenance: All systems drift; schedule quarterly audits and model retraining cycles.
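The multi-signal correlation tip can be sketched as a time-windowed join: escalate a brand mention only when a suspicious API event for the same model arrives within a time window. The shared `model_id` key, the record shapes, and the 15-minute window are hypothetical. In practice, the join key might be something else your telemetry shares with the mention source.

```python
from datetime import datetime, timedelta


def correlated_escalations(mentions, api_events, window=timedelta(minutes=15)):
    """Return (mention, api_event) pairs that corroborate each other.

    Only mentions with a suspicious API event for the same model_id within
    `window` (before or after) are escalated; the rest stay low priority."""
    events_by_model = {}
    for event in api_events:
        events_by_model.setdefault(event["model_id"], []).append(event)

    escalations = []
    for mention in mentions:
        for event in events_by_model.get(mention["model_id"], []):
            if abs(event["ts"] - mention["ts"]) <= window:
                escalations.append((mention, event))
                break  # one corroborating signal is enough to escalate
    return escalations


t0 = datetime(2024, 1, 1, 9, 0)
mentions = [{"model_id": "m-1", "ts": t0}, {"model_id": "m-2", "ts": t0}]
api_events = [
    {"model_id": "m-1", "ts": t0 + timedelta(minutes=5)},  # corroborates m-1
    {"model_id": "m-2", "ts": t0 + timedelta(hours=1)},    # too late for m-2
]
print([m["model_id"] for m, _ in correlated_escalations(mentions, api_events)])  # ['m-1']
```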

Final recommendation summary

Decide based on three axes: risk (compliance, brand exposure), speed (need for immediate coverage), and capability (engineering and ML maturity).

- If you need fast, broad coverage and low operational overhead: Buy (SaaS).
- If you require strict compliance, deep customization, or own unique telemetry: Build (in-house).
- If you need both scale today and control over critical signals: Hybrid. Start with vendor coverage, then selectively bring key detectors in-house.

In contrast to alarmist predictions, there is no universal winner: each option is a trade-off. For many organizations the optimal path is iterative. Start with broad visibility to reduce detection latency, then invest in bespoke detectors where cost and risk demand it.

[Screenshot placeholder: Decision matrix applied to a sample company showing recommended path and expected metrics improvements]

One last metaphor

Think of automated AI visibility monitoring as building situational awareness: a good system is a layered defense—satellite imagery (vendor breadth), CCTV (in-house telemetry), and patrols (human triage). Relying on a single layer leaves blind spots. Combining layers gives you both speed and precision.

Use the comparison framework and decision matrix above, measure the right metrics, and iterate. Real-time AI visibility alerts are valuable only when they are accurate and actionable; prioritize those qualities over feature lists.